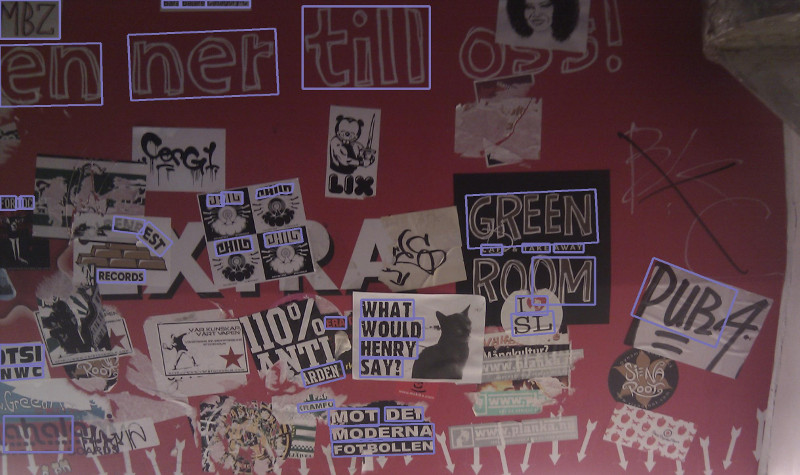

Read text in photos / images with complex backgrounds with this easy-to-use Python library. Based on deep learning (torchvision) models released by Clova AI Research.

```python
from PIL import Image
from photo_ocr import ocr, draw_ocr

# (download example.jpg here: https://github.com/krasch/photo_ocr/blob/master/example.jpg)
image = Image.open("example.jpg")

# run the ocr
results = ocr(image)
print(results)

# draw bounding polygons and text on the image
image = draw_ocr(image, results)

# done!
image.save("example_ocr.jpg")
```

Classic OCR tools like tesseract work best with scans of book pages or documents. Getting useful results out of these tools on images that do not contain black-on-white text requires a lot of manual image pre-processing. For such images, it is best to use tools that specialise in so-called "scene text recognition", such as photo_ocr.

photo_ocr processes an image in three stages:

photo_ocr is a wrapper around deep learning models kindly open-sourced by Clova AI Research.

For text detection, photo_ocr uses the CRAFT text detection model (paper, original source code). CRAFT has been released under the MIT license (see file LICENSE_detection.txt).

For text recognition, photo_ocr uses the models released in the Clova.ai text recognition model benchmark (paper, original source code). This collection of models has been released under the Apache 2.0 license (see file LICENSE_recognition.txt).

The models have been trained on English words, but also work well for other languages that use the Latin alphabet (see Troubleshooting for known issues). Other alphabets are currently not supported by photo_ocr.

photo_ocr works with Python 3.6, 3.7 and 3.8.

photo_ocr works with torchvision >=0.7 and <=0.10. If there is a newer version of torchvision that is not yet supported by photo_ocr, please open a GitHub issue to let us know!

```bash
git clone https://github.com/krasch/photo_ocr.git
cd photo_ocr
python setup.py install

# check that everything is working
python example.py
```

All models are automatically downloaded the first time they are needed. The models are stored locally in the standard PyTorch model directory, which you can change by setting the TORCH_HOME environment variable (see the official PyTorch documentation for details).
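To make the directory lookup concrete, here is a minimal sketch of where the weights would end up, assuming photo_ocr downloads them through PyTorch's torch.hub helpers. Those helpers store files in `<torch home>/hub/checkpoints`, where the torch home is TORCH_HOME if set, otherwise `$XDG_CACHE_HOME/torch`, otherwise `~/.cache/torch`. The function name expected_checkpoint_dir is hypothetical and only mirrors that convention. It is not part of photo_ocr or PyTorch.

```python
import os
from typing import Mapping, Optional


def expected_checkpoint_dir(env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical helper mirroring torch.hub's default download location.

    Assumption: photo_ocr fetches its weights via torch.hub, which stores
    them in <torch home>/hub/checkpoints.
    """
    env = os.environ if env is None else env
    # torch home: TORCH_HOME, else $XDG_CACHE_HOME/torch, else ~/.cache/torch
    cache_home = env.get("XDG_CACHE_HOME", os.path.join("~", ".cache"))
    torch_home = env.get("TORCH_HOME", os.path.join(cache_home, "torch"))
    return os.path.join(os.path.expanduser(torch_home), "hub", "checkpoints")


# Usage (hypothetical path): set the variable at the top of your script,
# before photo_ocr downloads anything:
#   os.environ["TORCH_HOME"] = "/data/torch_models"
```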

(You can find a script containing all the snippets below at example.py)

The library takes a Pillow (PIL) image as input.

You can use PIL directly to read the image from a file.

```python
from PIL import Image

# (download example.jpg here: https://github.com/krasch/photo_ocr/blob/master/example.jpg)
image = Image.open("example.jpg")
```

For convenience, photo_ocr also offers a load_image function, which opens the image and, if necessary, rotates it according to its EXIF metadata.

```python
from photo_ocr import load_image

image = load_image("example.jpg")
```

Running the OCR takes just one call to the ocr function:

```python
from photo_ocr import ocr

results = ocr(image)
```

The ocr function returns a list of all text instances found in the image. The list is sorted by recognition confidence, starting with the most confident recognition.
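The sketch below mimics the result entries with a hypothetical OcrResult namedtuple carrying the three fields described here (polygon, text, confidence). photo_ocr's real result type may be named differently, and the sample values are made up. It illustrates the most-confident-first ordering, plus the attribute access and tuple unpacking that the loops below rely on.

```python
from collections import namedtuple

# Hypothetical stand-in for one ocr result; photo_ocr's actual type may differ.
OcrResult = namedtuple("OcrResult", ["polygon", "text", "confidence"])


def most_confident_first(results):
    """Sort results by recognition confidence, highest first."""
    return sorted(results, key=lambda result: result.confidence, reverse=True)


# Made-up sample values, for illustration only.
sample_results = most_confident_first([
    OcrResult([(0, 0), (40, 0), (40, 10), (0, 10)], "exit", 0.62),
    OcrResult([(0, 20), (60, 20), (60, 35), (0, 35)], "parking", 0.97),
])

best = sample_results[0]
print(best.text, best.confidence)  # attribute access
polygon, text, confidence = best   # tuple unpacking
```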

You can loop over the results like this:

```python
for result in results:
    # polygon around the text
    # (list of xy coordinates: [(x0, y0), (x1, y1), ...])
    print(result.polygon)

    # the actual text (a string)
    print(result.text)

    # the recognition confidence (a number in [0.0, 1.0])
    print(result.confidence)
```

Since each entry in the results list is a namedtuple, you can also unpack its fields directly in the loop:

```python
for polygon, text, confidence in results:
    print(polygon)
    print(text)
    print(confidence)
```

Use the draw_ocr function to draw the OCR results onto the original image:

```python
from photo_ocr import draw_ocr

image = draw_ocr(image, results)
image.save("example_ocr.jpg")
```

To run only the text detection step, use the detection function:

```python
from photo_ocr import detection

# list of polygons where text was found
polygons = detection(image)

for polygon in polygons:
    # polygon around the text
    # (list of xy coordinates: [(x0, y0), (x1, y1), ...])
    print(polygon)
```

You can use the draw_detections function to draw the results of the detection:

```python
from photo_ocr import draw_detections

image = draw_detections(image, polygons)
image.save("example_detections.jpg")
```

To run only the text recognition step, use the recognition function. You need to supply an image that has already been cropped to a text polygon, with the text aligned horizontally.
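If your crops come from the detection step, one simple way to get from a polygon to a crop is to take its axis-aligned bounding box. The helper polygon_to_box below is hypothetical (not part of photo_ocr). It returns a (left, upper, right, lower) tuple, the box format that Pillow's Image.crop accepts. This only suits text that is already roughly horizontal; slanted or curved text would need a rotation or perspective warp, which this sketch does not attempt.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def polygon_to_box(polygon: Sequence[Point]) -> Tuple[int, int, int, int]:
    """Axis-aligned bounding box of a polygon as (left, upper, right, lower).

    Coordinates are rounded outwards so the box never cuts into the polygon.
    """
    if not polygon:
        raise ValueError("polygon must contain at least one point")
    xs = [x for x, _ in polygon]
    ys = [y for _, y in polygon]
    return (math.floor(min(xs)), math.floor(min(ys)),
            math.ceil(max(xs)), math.ceil(max(ys)))


# Hypothetical usage with the detection output from above (not run here):
#   crop = image.crop(polygon_to_box(polygons[0]))
#   text, confidence = recognition(crop)
```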

```python
from photo_ocr import load_image, recognition

# (download crop.jpg here: https://github.com/krasch/photo_ocr/blob/master/crop.jpg)
crop = load_image("crop.jpg")

text, confidence = recognition(crop)
```

If you have a GPU, photo_ocr will automatically use it!

If you have multiple GPUs and want photo_ocr to use a different one, set the CUDA_VISIBLE_DEVICES environment variable as shown below. Make sure that you import photo_ocr only after you have set the environment variable!

```python
import os

# if you have e.g. 4 GPUs, you can set their usage order like this
# (photo_ocr will only look at the first entry in the list
# and ignore the others, since it runs on only one GPU)
os.environ["CUDA_VISIBLE_DEVICES"] = "1,0,2,3"

# alternatively, you can choose to run on the CPU despite having a GPU
# (= simply make no device visible to photo_ocr)
# os.environ["CUDA_VISIBLE_DEVICES"] = ""

# only import photo_ocr after you have set the environment variable,
# otherwise photo_ocr will use the wrong GPU!
from photo_ocr import ocr
```

| Description | Reason | Solution |
|---|---|---|
| Special letters (e.g. å, ö, ñ) are not recognized properly | The models have been trained on Latin letters only. In most cases, recognition still works well, with the model using similar-looking substitutes for the special letters. | Use a spellchecker after running text recognition to get the correct letters. |
| Special characters (e.g. !, ?, ;) are not recognized properly | The default text recognition model supports only the characters a-z and 0-9. | Switch to the case-sensitive model, which also supports 30 common special characters. |
| Text area is found, but text recognition returns only one-letter results (e.g. e, i, a) | The text is at such a steep angle that the crop is rotated in the wrong direction. | Rotate the input image by 90°. |
| Text area is not found. | - | Try decreasing the confidence_threshold. Alternatively, decrease text_threshold_first_pass and text_threshold_second_pass. |
| Text area is found where there is no text. | - | Try increasing the confidence_threshold. Alternatively, increase text_threshold_first_pass and text_threshold_second_pass. |

If photo_ocr is too slow for your use case, first identify whether the detection step, the recognition step, or both are slow on your images by running each step in isolation (see above).
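A small timing helper makes the comparison between the two steps repeatable. median_runtime is a hypothetical name, not a photo_ocr function. It calls any function a few times and reports the median wall-clock time, which is less sensitive to one-off hiccups than a single measurement. Because models are downloaded and loaded on first use, a warm-up call before timing is advisable.

```python
import time
from statistics import median
from typing import Any, Callable


def median_runtime(fn: Callable[..., Any], *args: Any, repeats: int = 3) -> float:
    """Call fn(*args) `repeats` times; return the median wall-clock time in seconds."""
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    timings = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(*args)
        timings.append(time.perf_counter() - start)
    return median(timings)


# Hypothetical usage with the functions shown above (not run here):
#   detection(image)  # warm-up: the first call may also download the model
#   print("detection:", median_runtime(detection, image))
#   print("recognition:", median_runtime(recognition, crop))
```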

To speed up detection, try decreasing image_max_size and/or image_magnification. Smaller images are then fed to the detection model, which makes text detection faster. A possible unwanted side effect is that the model no longer finds small text areas in the downsized image.
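The effect of the two parameters on the detection input can be sketched from their descriptions in the detection parameter table: the longer side is magnified by image_magnification but capped at image_max_size, and the aspect ratio is kept. detection_input_size is a hypothetical helper modeled on that description (and on the resizing in the original CRAFT code). It ignores any padding the real pre-processing may add.

```python
from typing import Tuple


def detection_input_size(width: int, height: int,
                         image_max_size: int = 1280,
                         image_magnification: float = 1.5) -> Tuple[int, int]:
    """Approximate (width, height) of the image fed to the detection model."""
    longer_side = max(width, height)
    # magnify the longer side, but never beyond image_max_size
    target = min(image_magnification * longer_side, image_max_size)
    ratio = target / longer_side
    # scale both sides by the same ratio to keep the aspect ratio
    return int(width * ratio), int(height * ratio)
```

With the defaults, a 400x200 photo is enlarged to 600x300, while a 2560x1280 photo is shrunk to 1280x640. Halving image_max_size roughly quarters the pixel count of large images, which is where the speed-up comes from.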

To speed up recognition, try switching to a faster model, at the cost of some recognition quality. You can also try increasing batch_size, which makes most sense if your images contain a lot of text instances.
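Why batch_size matters most for text-heavy images can be seen by counting forward passes: the crops are fed to the recognition model in chunks of batch_size, so a larger batch means fewer passes (at the cost of more memory per pass). The helpers batches and forward_passes below are hypothetical illustrations of this chunking, not photo_ocr internals.

```python
import math
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def batches(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most batch_size items."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


def forward_passes(num_crops: int, batch_size: int) -> int:
    """Number of model calls needed to recognise num_crops crops."""
    return math.ceil(num_crops / batch_size)
```

For an image with 100 text instances, the default batch_size of 32 needs 4 passes, while 64 needs only 2. An image with 5 text instances needs a single pass either way.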

| Name | Description | Values |
|---|---|---|
| confidence_threshold | Only recognitions with confidence larger than this threshold will be returned. | a float in [0.0, 1.0), default=0.3 |

```python
results = ocr(image, confidence_threshold=0.3)
```

For convenience, the ocr, detection and recognition functions are pre-initialised with sensible defaults. If you want to change any of the detection or recognition parameters below, you need to initialise these functions again with your own settings (see the initialisation code).

| Name | Description | Values |
|---|---|---|
| image_max_size | During image pre-processing before running text detection, the image will be resized such that the larger side of the image is no larger than image_max_size. | an integer, default=1280 |
| image_magnification | During image pre-processing before running text detection, the image will be magnified by this value (but no bigger than image_max_size). | a float ≥ 1.0, default=1.5 |
| combine_words_to_lines | If True, use the additional "RefineNet" model to link individual words that are horizontally near each other into lines. | a boolean, default=False |
| text_threshold_first_pass | The CRAFT model produces, for every pixel, a score of how likely it is that this pixel is part of a text character (called region score in the paper). During postprocessing, only pixels with a score above text_threshold_first_pass are considered. | a float in [0.0, 1.0], default=0.4 |
| text_threshold_second_pass | See the explanation of text_threshold_first_pass. During postprocessing, a second round of thresholding happens after the individual characters have been linked together into words (see link_threshold); text_threshold_second_pass >= text_threshold_first_pass. | a float in [0.0, 1.0], default=0.7 |
| link_threshold | The CRAFT model produces, for every pixel, a score of how likely it is that this pixel lies between two text characters (called affinity score in the paper). During postprocessing, this score is used to link individual characters together into words. | a float in [0.0, 1.0], default=0.4 |
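To show how the three thresholds interact, here is a simplified, pure-Python sketch of CRAFT-style postprocessing on tiny score maps. It is modeled on the original CRAFT postprocessing and on the table above, not on photo_ocr's actual code. find_words is a hypothetical name, and the real implementation works on NumPy arrays, fits polygons and filters tiny components, all of which this sketch skips. A pixel passes the first pass if its region score exceeds text_threshold_first_pass or its affinity score exceeds link_threshold. Connected candidate pixels form a word, and a word is kept only if its strongest region score reaches text_threshold_second_pass.

```python
from collections import deque
from typing import List, Set, Tuple

Pixel = Tuple[int, int]


def find_words(region: List[List[float]], affinity: List[List[float]],
               text_threshold_first_pass: float = 0.4,
               text_threshold_second_pass: float = 0.7,
               link_threshold: float = 0.4) -> List[Set[Pixel]]:
    """Group pixels into words from region and affinity score maps (simplified)."""
    rows, cols = len(region), len(region[0])
    # First pass: a pixel is a candidate if it looks like part of a character
    # or like the link between two characters.
    candidate = [[region[r][c] > text_threshold_first_pass
                  or affinity[r][c] > link_threshold
                  for c in range(cols)] for r in range(rows)]
    seen = [[False] * cols for _ in range(rows)]
    words: List[Set[Pixel]] = []
    for r in range(rows):
        for c in range(cols):
            if not candidate[r][c] or seen[r][c]:
                continue
            # Flood fill (4-connectivity) to collect one connected component.
            component: Set[Pixel] = set()
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                component.add((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and candidate[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            # Second pass: keep the word only if its strongest character
            # pixel clears the stricter threshold.
            if max(region[y][x] for y, x in component) >= text_threshold_second_pass:
                words.append(component)
    return words
```

On a one-row map with two strong characters joined by a link pixel and one weak character further right, the defaults yield a single word made of the first three pixels. Raising link_threshold above the link score splits that word in two, and lowering text_threshold_second_pass lets the weak character through as its own word.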

| Name | Description | Values |
|---|---|---|
| model | Which recognition model to use; see the paper, in particular Figure 4. Best performance: model_zoo.TPS_ResNet_BiLSTM_Attn; slightly worse performance but five times faster: model_zoo.None_ResNet_None_CTC; case-sensitive: model_zoo.TPS_ResNet_BiLSTM_Attn_case_sensitive | One of the initialisation functions in photo_ocr.recognition.model_zoo, default=model_zoo.TPS_ResNet_BiLSTM_Attn |
| image_width | During image pre-processing, the (cropped) image will be resized to this width; models were trained with width=100, other values don't seem to work as well | an integer, default=100 |
| image_height | During image pre-processing, the (cropped) image will be resized to this height; models were trained with height=32, other values don't seem to work as well | an integer, default=32 |
| keep_ratio | When resizing images during pre-processing: True -> keep the width/height ratio (and pad appropriately) or False -> simple resize without keeping ratio | a boolean, default=False |
| batch_size | Size of the batches to be fed to the model. | an integer, default=32 |
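The difference between the two keep_ratio modes can be sketched as follows. This is a hypothetical helper modeled on the padding approach of the Clova.ai recognition benchmark code, and it assumes padding is added on the right. With keep_ratio=False every crop is stretched to image_width x image_height. With keep_ratio=True the crop is scaled to image_height, its width is capped at image_width, and the remainder is padded.

```python
import math
from typing import Tuple


def recognition_resize(width: int, height: int,
                       image_width: int = 100, image_height: int = 32,
                       keep_ratio: bool = False) -> Tuple[int, int, int]:
    """Return (resized_width, resized_height, right_padding) for one crop."""
    if not keep_ratio:
        # simple resize: the crop is stretched, its aspect ratio is ignored
        return image_width, image_height, 0
    # keep the ratio: scale to image_height, cap the width, pad the rest
    resized_width = min(image_width, math.ceil(image_height * width / height))
    return resized_width, image_height, image_width - resized_width
```

A short 60x30 crop becomes 64x32 plus 36 pixels of padding with keep_ratio=True, instead of being stretched to 100x32. A long 200x50 crop fills the full width in both modes.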

```python
from photo_ocr import PhotoOCR
from photo_ocr.recognition import model_zoo

detection_params = {"image_max_size": 1280,
                    "image_magnification": 1.5,
                    "combine_words_to_lines": False,
                    "text_threshold_first_pass": 0.4,
                    "text_threshold_second_pass": 0.7,
                    "link_threshold": 0.4}

recognition_params = {"model": model_zoo.TPS_ResNet_BiLSTM_Attn,
                      "image_width": 100,
                      "image_height": 32,
                      "keep_ratio": False}

# initialise the photo_ocr object
photo_ocr = PhotoOCR(detection_params, recognition_params)

# optionally: make class methods available as global functions for convenience
ocr = photo_ocr.ocr
detection = photo_ocr.detection
recognition = photo_ocr.recognition
```

This repository contains three license files:

| Filename | License | Owner | What does it cover? |
|---|---|---|---|
| LICENSE_detection.txt (Copy of original license) | MIT | NAVER Corp. | The model architectures in photo_ocr.detection as well as some of the postprocessing code. Also the detection model weights hosted on https://github.com/krasch/photo_ocr_models |
| LICENSE_recognition.txt (Copy of original license) | Apache 2.0 | original license file does not contain a copyright owner, but presumably also NAVER Corp. | The model architectures in photo_ocr.recognition as well as some of the postprocessing code. Also the recognition model weights hosted on https://github.com/krasch/photo_ocr_models |
| LICENSE.txt | Apache 2.0 | krasch | Everything else |